Production Sitecore 10 AWS EKS deploys - connecting VPCs

March 2021

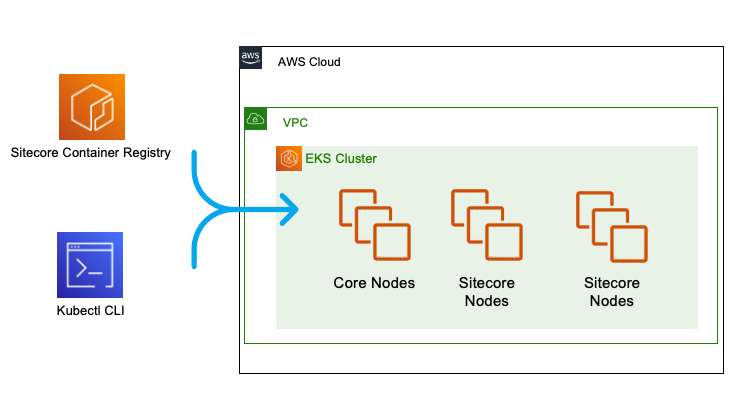

In an out-of-the-box Sitecore 10 Kubernetes install, we have one or more core nodes (the Kubernetes control plane) along with one or more Sitecore application-tier nodes, containing pods for the various Sitecore roles (CD, CM, SQL, Solr):

The next step in readying a Kubernetes-powered AWS EKS Sitecore 10 deployment for production is moving the data services (Solr, MS SQL) outside of the Kubernetes cluster.

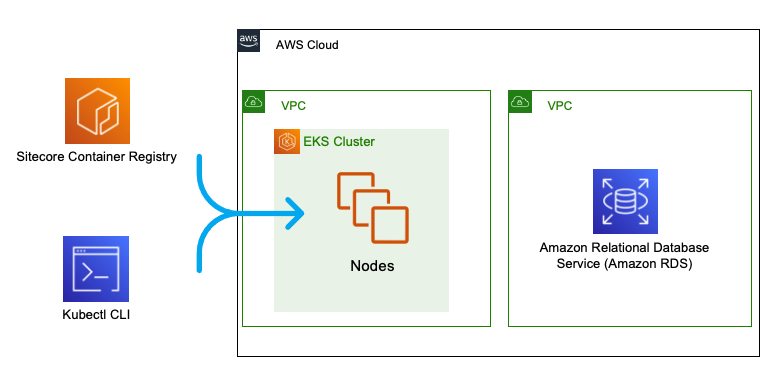

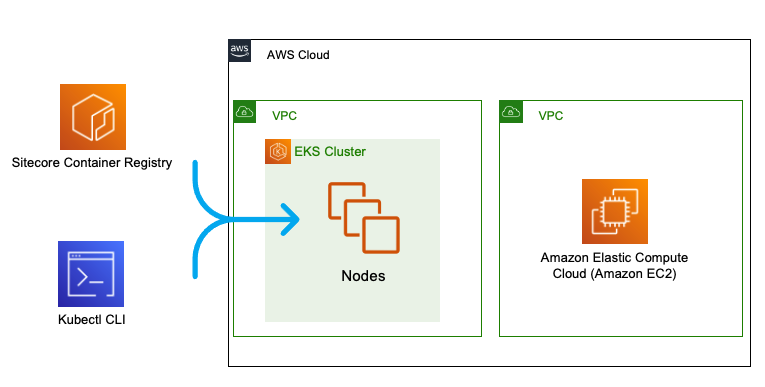

In this blog post, we look at how to separate our Sitecore 10 data services (SQL Server) from our main EKS cluster using separate VPCs.

While Kubernetes continues to develop its support for stateful workloads, it is still best practice to keep Kubernetes for your stateless compute tasks and run databases outside of the cluster.

Options on AWS

There are two mainstream options available to host your SQL Server instance on AWS. Both have pros and cons, and we'll quickly summarise here.

AWS RDS

RDS is a managed database product. A SQL Server instance can be provisioned through the usual channels (AWS Console, AWS CLI) and the infrastructure management (including backups) is taken care of.

Pros

- Low administration burden, easy scaling, easy multi-AZ setup, automated backups and snapshots

Cons

- AWS does not grant you an administrator account with full privileges. Running the Sitecore 10 Kubernetes mssql-init job against an RDS database will surface many permission errors.

Workarounds exist, but the main path to production deployment is still importing an existing Sitecore database into RDS, which won't suit all workflows.

SQL Server on Amazon EC2 instances

Both Windows and Linux instances can now run SQL Server, so this is a great fallback option for when you don't want to rework installation processes to be compatible with RDS.

Pros

- Full control, simply a database in the cloud

- Can use existing Sitecore 10 database initialisation methods

Cons

- Administration burden. Backups, scaling, failover - they're all on you.

A common configuration - separate VPCs

In this section, we show how to separate your cluster VPC from your MS SQL VPC.

Whichever approach you choose, it is a common security and management practice to run your database workloads in a separate VPC from your EKS cluster. You may want the safety of a different security profile for your database, or you may want to monitor the VPCs using separate tooling.

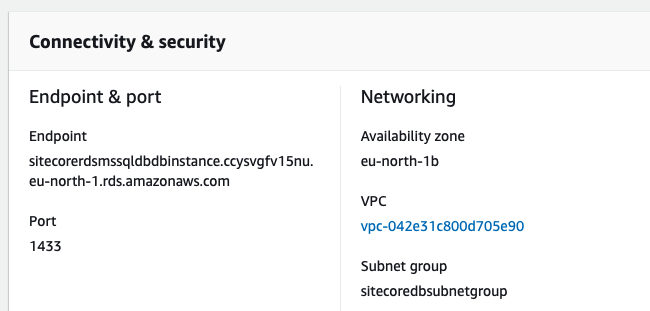

Connecting an RDS VPC to your Sitecore EKS cluster

Create the RDS SQL instance

1a. Install jq for use in PowerShell - we'll use this to view the outputs of our AWS service creation

1b. Create a VPC to hold your Sitecore 10 RDS SQL Service

1c. Store the VPC ID as an environment variable for later use
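A minimal sketch of steps 1b and 1c, written for a POSIX shell. The function name `create_rds_vpc`, the variable `RDS_VPC_ID` and the `10.0.0.0/24` CIDR are assumptions for illustration, and the CLI's built-in `--query` option is used here in place of jq. In PowerShell the same `aws` arguments apply; only the variable syntax differs.

```shell
# Hypothetical helper: create a VPC with the given CIDR block and print its ID.
create_rds_vpc() {
  aws ec2 create-vpc \
    --cidr-block "$1" \
    --query 'Vpc.VpcId' \
    --output text
}

# Example usage (needs AWS credentials; the CIDR is an assumed value and
# must not overlap with the EKS VPC's range, or peering will not work):
#   export RDS_VPC_ID="$(create_rds_vpc 10.0.0.0/24)"
```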

1d. Create a subnet in each of two availability zones (eu-north-1a, eu-north-1b), to ensure high availability

1e. And now, the second subnet
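Steps 1d and 1e could look roughly like this in a POSIX shell. The helper name `create_rds_subnet`, the variable names and the two `/25` CIDR blocks are assumptions (they split an assumed `10.0.0.0/24` VPC in half); only the availability zones come from the text above.

```shell
# Hypothetical helper: create a subnet in one availability zone and print its ID.
# Arguments: VPC ID, subnet CIDR block, availability zone.
create_rds_subnet() {
  aws ec2 create-subnet \
    --vpc-id "$1" \
    --cidr-block "$2" \
    --availability-zone "$3" \
    --query 'Subnet.SubnetId' \
    --output text
}

# Example usage (CIDR blocks are assumed values):
#   export RDS_SUBNET_A="$(create_rds_subnet "$RDS_VPC_ID" 10.0.0.0/25 eu-north-1a)"
#   export RDS_SUBNET_B="$(create_rds_subnet "$RDS_VPC_ID" 10.0.0.128/25 eu-north-1b)"
```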

Our VPC has a route table: a set of rules that determines where network traffic from our subnets is sent. We need to associate this route table with our newly created subnets.

1f. First, get the ID of our VPC's route table:

1g. Store this as an environment variable for later use

1h. Now, associate the route table with our first subnet

1i. Associate the route table with our second subnet
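As a sketch of steps 1f to 1i in a POSIX shell: one helper looks up the route table and another associates it with a subnet. The helper and variable names are assumptions, and `--query` stands in for jq. The lookup takes the first route table returned, which is a reasonable guess only for a freshly created VPC that has nothing but its main route table.

```shell
# Hypothetical helper: print the ID of the first route table found in a VPC.
get_route_table_id() {
  aws ec2 describe-route-tables \
    --filters "Name=vpc-id,Values=$1" \
    --query 'RouteTables[0].RouteTableId' \
    --output text
}

# Hypothetical helper: associate a route table with a subnet.
# Arguments: route table ID, subnet ID.
associate_subnet() {
  aws ec2 associate-route-table \
    --route-table-id "$1" \
    --subnet-id "$2"
}

# Example usage:
#   export RDS_ROUTE_TABLE_ID="$(get_route_table_id "$RDS_VPC_ID")"
#   associate_subnet "$RDS_ROUTE_TABLE_ID" "$RDS_SUBNET_A"
#   associate_subnet "$RDS_ROUTE_TABLE_ID" "$RDS_SUBNET_B"
```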

1j. Our RDS database instance won't want to concern itself with individual subnets. Rather, let's create a subnet group we can later associate with RDS.
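One possible shape for step 1j in a POSIX shell. The helper name, the description text and the group name `sitecore-rds-subnets` in the usage line are assumptions; any name works as long as the same one is passed to RDS later.

```shell
# Hypothetical helper: group two subnets so RDS can place the instance in either.
# Arguments: subnet group name, first subnet ID, second subnet ID.
create_rds_subnet_group() {
  aws rds create-db-subnet-group \
    --db-subnet-group-name "$1" \
    --db-subnet-group-description "Subnets for the Sitecore RDS SQL Server instance" \
    --subnet-ids "$2" "$3"
}

# Example usage:
#   create_rds_subnet_group sitecore-rds-subnets "$RDS_SUBNET_A" "$RDS_SUBNET_B"
```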

1k. Now, our network rules are all complete! The last thing we have to do is create a security group which we'll use to allow network traffic to pass through (think inbound firewall rule here)

1l. Add this newly created security group ID as an environment variable
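A hedged sketch of steps 1k and 1l in a POSIX shell. The helper name, the group name `sitecore-rds-sg`, the description and the variable `RDS_SG_ID` are all assumptions. Note that a new security group allows no inbound traffic until a rule is added to it.

```shell
# Hypothetical helper: create a security group in a VPC and print its ID.
# Arguments: VPC ID, security group name.
create_rds_security_group() {
  aws ec2 create-security-group \
    --group-name "$2" \
    --description "Inbound SQL Server traffic for Sitecore" \
    --vpc-id "$1" \
    --query 'GroupId' \
    --output text
}

# Example usage:
#   export RDS_SG_ID="$(create_rds_security_group "$RDS_VPC_ID" sitecore-rds-sg)"
```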

1m. With our plumbing complete, we can finally create a SQL Server instance in RDS to hold our Sitecore databases
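Step 1m might be sketched as follows in a POSIX shell. Everything specific here is an assumption: the helper name, the master username `sitecoreadmin`, the 20 GiB of storage, and the engine and instance class in the usage line, which are small test-sized values whose availability should be checked for your region. The password is read from an assumed `SQL_MASTER_PASSWORD` environment variable so it stays out of the command history.

```shell
# Hypothetical helper: create a private SQL Server instance in RDS.
# Arguments: instance identifier, engine, instance class,
#            DB subnet group name, security group ID.
create_sitecore_rds_instance() {
  aws rds create-db-instance \
    --db-instance-identifier "$1" \
    --engine "$2" \
    --db-instance-class "$3" \
    --license-model license-included \
    --allocated-storage 20 \
    --master-username sitecoreadmin \
    --master-user-password "$SQL_MASTER_PASSWORD" \
    --db-subnet-group-name "$4" \
    --vpc-security-group-ids "$5" \
    --no-publicly-accessible
}

# Example usage (engine and instance class are assumed example values):
#   create_sitecore_rds_instance sitecore-sql sqlserver-ex db.t3.small \
#     sitecore-rds-subnets "$RDS_SG_ID"
```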

Creating the Sitecore EKS cluster

2a. Now that our database instance is in place, let's create an EKS cluster to host Sitecore, in a different VPC
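A rough sketch of step 2a, assuming eksctl is the tool of choice (other provisioning tools work just as well). The helper name, the cluster name `sitecore` and the `192.168.0.0/16` CIDR are assumptions; the important part is that the cluster VPC's range does not overlap the RDS VPC's range. Node groups are left out of this sketch, so the Windows nodes Sitecore needs would still have to be added separately.

```shell
# Hypothetical helper: create an EKS control plane in its own new VPC.
# Arguments: cluster name, region, VPC CIDR block.
create_sitecore_cluster() {
  eksctl create cluster \
    --name "$1" \
    --region "$2" \
    --vpc-cidr "$3" \
    --without-nodegroup
}

# Example usage (name and CIDR are assumed values):
#   create_sitecore_cluster sitecore eu-north-1 192.168.0.0/16
```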

2b. Once complete, head to RDS in the AWS Console, and find the Endpoint URL of our new RDS SQL instance.

We can use this Endpoint URL to create an alias within our cluster, called sql-sitecore. Using this alias, Sitecore containers can connect to our SQL Server instance using the server name sql-sitecore as if it's on a local network.

2c. Create a new Kubernetes manifest file called sql-sitecore.yaml with the following content (remembering to set externalName to the hostname of your RDS endpoint)

2d. Apply this file using kubectl
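For steps 2c and 2d, a minimal sketch in a POSIX shell that writes an ExternalName Service manifest and then applies it. The helper name `write_sql_alias_manifest` is an assumption, and the endpoint in the usage line is a made-up placeholder, not a real hostname.

```shell
# Hypothetical helper: write sql-sitecore.yaml, pointing the in-cluster
# name "sql-sitecore" at the given RDS endpoint hostname.
write_sql_alias_manifest() {
  cat > sql-sitecore.yaml <<EOF
apiVersion: v1
kind: Service
metadata:
  name: sql-sitecore
spec:
  type: ExternalName
  externalName: $1
EOF
}

# Example usage (placeholder endpoint; use the one shown in the RDS console):
#   write_sql_alias_manifest sitecore-sql.abc123example.eu-north-1.rds.amazonaws.com
#   kubectl apply -f sql-sitecore.yaml
```

An ExternalName Service creates no proxy or load balancer. Cluster DNS simply answers lookups for sql-sitecore with a CNAME to the RDS hostname, so the traffic still needs a network route between the two VPCs.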

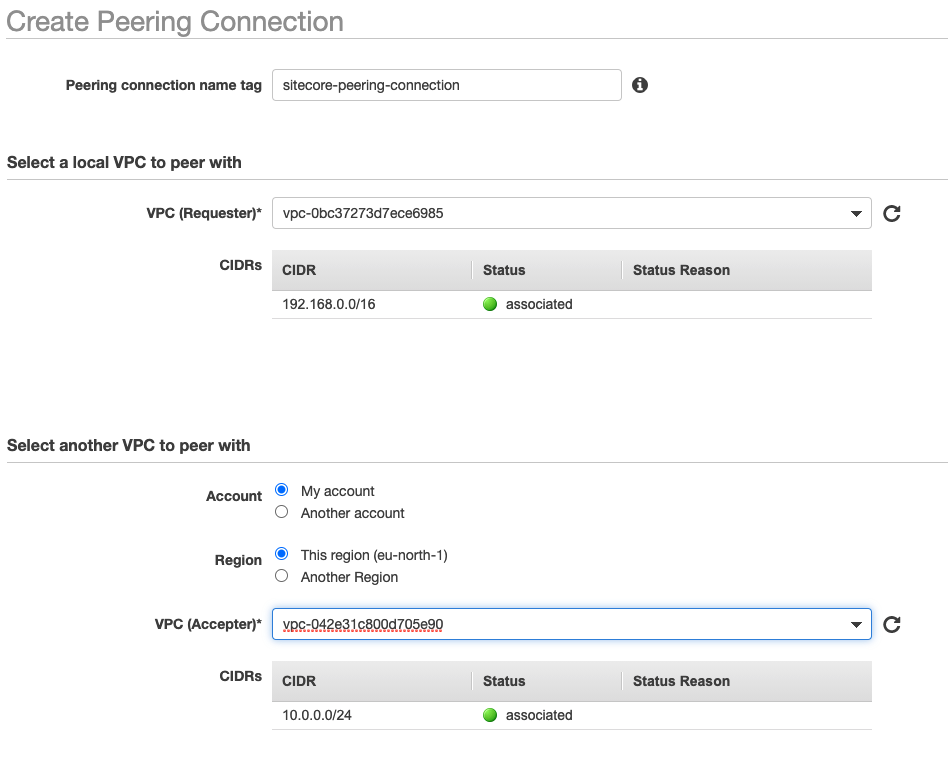

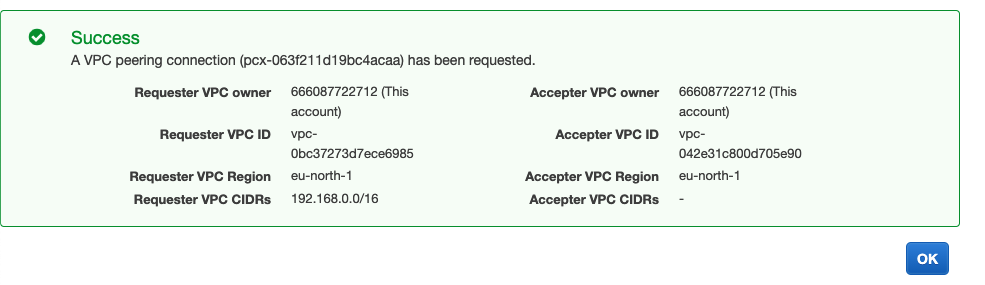

Creating the Peering Connection

The real magic is now building a peering connection between our RDS and EKS VPCs. This allows resources in the two VPCs to reach each other over private IP addresses!

3a. In the AWS Console VPC section, select Create Peering Connection. Let the Requester be the EKS VPC, and the Accepter be the RDS VPC

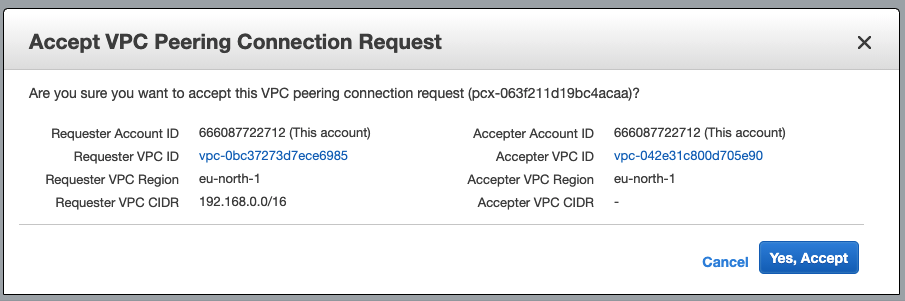

3b. Once created, right-click on your new Peering Connection to Accept it.

3c. Add the Peering Connection ID as an environment variable:
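The steps above use the AWS Console. As an alternative, the same request, acceptance and ID capture could be sketched with the CLI in a POSIX shell. The helper names and the variables `EKS_VPC_ID` and `PEERING_ID` are assumptions, and both VPCs are assumed to be in the same account and region.

```shell
# Hypothetical helper: request a peering connection and print its ID.
# Arguments: requester (EKS) VPC ID, accepter (RDS) VPC ID.
create_peering_connection() {
  aws ec2 create-vpc-peering-connection \
    --vpc-id "$1" \
    --peer-vpc-id "$2" \
    --query 'VpcPeeringConnection.VpcPeeringConnectionId' \
    --output text
}

# Hypothetical helper: accept a pending peering connection.
accept_peering_connection() {
  aws ec2 accept-vpc-peering-connection \
    --vpc-peering-connection-id "$1"
}

# Example usage:
#   export PEERING_ID="$(create_peering_connection "$EKS_VPC_ID" "$RDS_VPC_ID")"
#   accept_peering_connection "$PEERING_ID"
```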

Updating EKS and RDS to use the Peering Connection

4a. Now, let's find the corresponding route table for our EKS VPC

4b. Add this ID as an environment variable

4c. Update the EKS route table to send all RDS-directed traffic through the peering connection

4d. Update the RDS route table to send all EKS-directed traffic through the peering connection
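Steps 4a to 4d could be sketched like this in a POSIX shell. The helper and variable names are assumptions, as are the two CIDR blocks in the usage lines (`10.0.0.0/24` for the RDS VPC, `192.168.0.0/16` for the EKS VPC). A cluster VPC often has several route tables, so the sketch lists them all and leaves the choice to you; the route must be added to every table used by subnets that host Sitecore pods.

```shell
# Hypothetical helper: print the IDs of all route tables in a VPC.
list_route_table_ids() {
  aws ec2 describe-route-tables \
    --filters "Name=vpc-id,Values=$1" \
    --query 'RouteTables[].RouteTableId' \
    --output text
}

# Hypothetical helper: route traffic for a CIDR block through a peering connection.
# Arguments: route table ID, destination CIDR block, peering connection ID.
add_peering_route() {
  aws ec2 create-route \
    --route-table-id "$1" \
    --destination-cidr-block "$2" \
    --vpc-peering-connection-id "$3"
}

# Example usage (CIDR blocks are assumed values):
#   list_route_table_ids "$EKS_VPC_ID"
#   export EKS_ROUTE_TABLE_ID="<ID chosen from the list above>"
#   add_peering_route "$EKS_ROUTE_TABLE_ID" 10.0.0.0/24 "$PEERING_ID"      # EKS to RDS
#   add_peering_route "$RDS_ROUTE_TABLE_ID" 192.168.0.0/16 "$PEERING_ID"   # RDS to EKS
```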

4e. Lastly, ensure port 1433 is open to traffic coming into the RDS VPC from the EKS cluster
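A minimal sketch of step 4e in a POSIX shell, adding an inbound rule to the RDS security group. The helper name and the `192.168.0.0/16` source range are assumptions; substitute the actual CIDR block of your EKS VPC, or a narrower range covering only the node subnets.

```shell
# Hypothetical helper: allow inbound SQL Server traffic (TCP 1433) from a CIDR block.
# Arguments: security group ID, source CIDR block.
allow_sql_from_cidr() {
  aws ec2 authorize-security-group-ingress \
    --group-id "$1" \
    --protocol tcp \
    --port 1433 \
    --cidr "$2"
}

# Example usage (the CIDR is an assumed value for the EKS VPC):
#   allow_sql_from_cidr "$RDS_SG_ID" 192.168.0.0/16
```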

That was a lot! Separating VPCs is not trivial, but it is a cloud networking good practice you're going to want to get into the habit of.